Machine learning models need a reliable hosting platform. AWS is a popular choice.


Hosting machine learning models on AWS offers many advantages. AWS provides scalable, secure, and cost-effective solutions. It supports various machine learning frameworks and tools. You can deploy models quickly and manage them easily. AWS’s infrastructure can handle large-scale deployments, which helps your models run smoothly even under heavy loads.

In this blog post, we will explore how to host machine learning models on AWS. You will learn about the benefits, steps, and best practices. Whether you are a beginner or an expert, this guide will help you make the most of AWS for your machine learning needs. Dive in to discover the potential of AWS for your models.

Introduction To AWS For Machine Learning

Machine learning is transforming industries, and hosting models on the right platform is crucial. Amazon Web Services (AWS) offers robust solutions for machine learning, with tools to build, train, and deploy models efficiently. Let’s look at why AWS is a popular choice for machine learning and explore the key services it offers.

Why Choose AWS?

AWS is renowned for its scalability. You can start small and expand as needed, which helps you manage resources effectively. AWS also supports high availability: by running your application across multiple Availability Zones, it can stay accessible even if a single component fails. Security is another strong point. AWS provides features such as IAM access control and encryption to protect your data. These aspects make AWS a reliable choice for hosting machine learning models.

Key AWS Services For ML

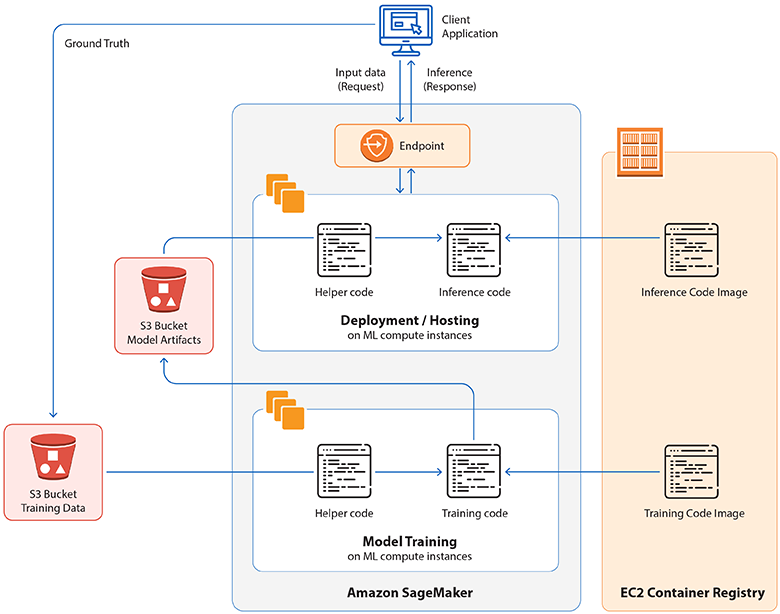

AWS offers several services tailored for machine learning:

- Amazon SageMaker is a comprehensive service to build, train, and deploy models. It supports frameworks such as TensorFlow and PyTorch.

- AWS Lambda runs code without requiring you to manage servers, which makes it a good fit for lightweight ML tasks.

- Amazon EC2 offers customizable virtual servers, so you can choose the instance type that suits your needs.

- AWS Glue simplifies data preparation. It extracts, transforms, and loads (ETL) data for analysis.

- Amazon S3 provides scalable object storage. It is designed for high durability, and you control who can access your data and how it is encrypted.

Setting Up Your AWS Account

Hosting machine learning models on AWS can seem complex. But setting up an AWS account is the first step. You need a solid foundation to manage your models effectively. This guide will help you get started with ease.

Creating An AWS Account

To begin, visit the AWS website and click on “Create an AWS Account.” You will need to provide basic information. This includes your name, email, and a password. Make sure to use a strong password for security.

Next, enter your payment information. AWS requires this even if you plan to use the free tier. Don’t worry, you won’t be charged as long as you stay within the free tier limits. After this, you need to verify your identity. AWS will send a text message or call to confirm your phone number.

Once verified, choose a support plan. The Basic plan is free and sufficient for most users. Finally, review your details and complete the account setup. Your AWS account is now ready.

Understanding AWS Free Tier

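The AWS Free Tier is a great way to explore AWS services. It offers limited free usage of many services. Many offers last for 12 months from the account creation date, while others are always free or short-term trials. AWS revises the Free Tier from time to time, so check the current terms on the AWS Free Tier page.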

The Free Tier includes various services. For example, Amazon EC2 offers 750 hours each month of Linux and Windows t2.micro instances (t3.micro in Regions where t2.micro is unavailable). Amazon S3 offers 5 GB of Standard storage. These resources are often enough for small projects and experimentation.

Be mindful of the limits to avoid charges. The Billing and Cost Management console shows your Free Tier usage, and you can turn on Free Tier usage alerts or set a budget with AWS Budgets to be notified before you exceed the limits.
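If you want an email before Free Tier usage turns into a real bill, AWS Budgets can set this up programmatically. The sketch below builds a `CreateBudget` request for a monthly cost budget that emails you when actual spend passes 80% of the limit. The budget name, the $5 limit, the 80% threshold, and the email address are hypothetical examples; the boto3 client is passed in so the request can be inspected without AWS credentials.

```python
# Sketch: a monthly AWS Budgets cost alert, created with boto3.
# Assumptions: budget name, limit, threshold, and email are hypothetical examples.


def build_budget_request(account_id, limit_usd, email, threshold_pct=80.0):
    """Return keyword arguments for the Budgets CreateBudget API."""
    return {
        "AccountId": account_id,
        "Budget": {
            "BudgetName": "monthly-ml-budget",  # hypothetical name
            "BudgetLimit": {"Amount": str(limit_usd), "Unit": "USD"},  # API expects a string
            "TimeUnit": "MONTHLY",
            "BudgetType": "COST",
        },
        "NotificationsWithSubscribers": [
            {
                # Email when ACTUAL spend exceeds threshold_pct percent of the limit.
                "Notification": {
                    "NotificationType": "ACTUAL",
                    "ComparisonOperator": "GREATER_THAN",
                    "Threshold": threshold_pct,
                    "ThresholdType": "PERCENTAGE",
                },
                "Subscribers": [{"SubscriptionType": "EMAIL", "Address": email}],
            }
        ],
    }


def create_budget(budgets_client, request):
    """budgets_client is a boto3 "budgets" client (passed in so it can be faked)."""
    return budgets_client.create_budget(**request)


# Usage (requires AWS credentials):
#   import boto3
#   account_id = boto3.client("sts").get_caller_identity()["Account"]
#   create_budget(boto3.client("budgets"), build_budget_request(account_id, 5, "you@example.com"))
```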

By understanding the Free Tier, you can make the most of your AWS account. This knowledge helps you use AWS services efficiently without incurring unexpected costs.

Preparing Your Machine Learning Model

Preparing your machine learning model is a critical step before hosting it on AWS. This process ensures your model is ready to handle real-world data and perform as expected. Below, we will walk through the steps involved in training and exporting your machine learning model.

Training Your Model

First, gather and clean your data; this step is crucial for accurate results. Use tools like pandas to handle missing values and outliers. Once your data is ready, choose an algorithm that fits your problem. Algorithms such as linear regression or decision trees are common choices. Train your model on a subset of your data, known as the training set, then evaluate its performance on a held-out validation set. Adjust your model if needed.

Exporting Your Model

After training, export your model so it is ready for deployment on AWS. Save it in a format your serving environment can load; ONNX and TensorFlow SavedModel are popular choices. Include all necessary files, such as the model itself and any required metadata. Store these files in an S3 bucket, which makes your model easy to access from other AWS services.
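SageMaker’s framework containers load model artifacts from a gzipped tar archive (conventionally `model.tar.gz`) stored in S3. The sketch below packages an exported model directory that way and uploads it with boto3’s `upload_file`. The directory, bucket, and key names are hypothetical, and the S3 client is passed in so the packaging step can be tested without AWS credentials.

```python
# Sketch: package exported model files as model.tar.gz and upload them to S3.
# Assumptions: directory, bucket, and key names are hypothetical.
import tarfile
from pathlib import Path


def package_model(model_dir, output_path="model.tar.gz"):
    """Archive every file under model_dir, with paths relative to model_dir."""
    model_dir = Path(model_dir)
    with tarfile.open(output_path, "w:gz") as tar:
        for path in sorted(model_dir.rglob("*")):
            if path.is_file():
                # arcname drops the parent folders so files sit at the archive root.
                tar.add(str(path), arcname=str(path.relative_to(model_dir)))
    return output_path


def upload_to_s3(s3_client, local_path, bucket, key):
    """Upload with a boto3 S3 client and return the resulting s3:// URI."""
    s3_client.upload_file(local_path, bucket, key)
    return f"s3://{bucket}/{key}"


# Usage (requires AWS credentials):
#   import boto3
#   archive = package_model("exported_model/")
#   uri = upload_to_s3(boto3.client("s3"), archive, "my-ml-bucket", "models/model.tar.gz")
```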


Choosing The Right Aws Service

Choosing the right AWS service for hosting machine learning models can be challenging. AWS offers various options, each with its strengths. Selecting the best service depends on your project’s needs, budget, and technical skills. Below, we will explore three popular AWS services: SageMaker, EC2 Instances, and Lambda Functions.

SageMaker

SageMaker is a managed service designed for developers. It simplifies building, training, and deploying machine learning models. SageMaker handles much of the underlying infrastructure. This allows you to focus on your model. It supports many popular frameworks like TensorFlow and PyTorch. SageMaker also offers built-in algorithms. These can save time and effort. If you need a comprehensive, managed solution, SageMaker is a great choice.

EC2 Instances

EC2 Instances provide flexibility and control. You can choose the instance type that fits your needs. This includes CPU or GPU options. EC2 is ideal for custom environments and specific configurations. You manage the setup, which gives you control over every detail. This option suits experienced developers. It offers scalability and performance for complex models. Pricing is pay-as-you-go, which helps manage costs.

Lambda Functions

Lambda Functions are for serverless computing. They allow you to run code without provisioning servers. Lambda is event-driven and scales automatically. It is best for lightweight models and real-time predictions on CPU: Lambda has no GPU support, a function can run for at most 15 minutes with up to 10 GB of memory, and cold starts can add latency to the first request. You only pay for execution time, which can reduce costs for infrequent tasks. Lambda integrates with other AWS services, making it easy to build complex workflows. For small-scale or sporadic tasks, Lambda is efficient and cost-effective.

Deploying Your Model With SageMaker

Amazon SageMaker is a powerful tool for deploying machine learning models. It simplifies the process, making it accessible even for beginners. With SageMaker, you can train, deploy, and scale your models seamlessly.

Creating A SageMaker Notebook Instance

Start by creating a SageMaker notebook instance. This is your workspace for building and training models. Go to the AWS Management Console. Navigate to Amazon SageMaker. Click on “Notebook instances”. Choose “Create notebook instance”. Fill in the details. Select an instance type that fits your needs. Configure the IAM role. This allows SageMaker to access other AWS services. Finally, click “Create notebook instance”. Your notebook instance will be ready in a few minutes.
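The same notebook instance can be created from code, which is handy when you want a repeatable setup. This is a sketch using boto3’s `create_notebook_instance`; the instance name, role ARN, `ml.t3.medium` instance type, and 5 GB volume are hypothetical choices, and the IAM role must already exist.

```python
# Sketch: create a SageMaker notebook instance with boto3 instead of the console.
# Assumptions: name, role ARN, instance type, and volume size are hypothetical.


def build_notebook_request(name, role_arn, instance_type="ml.t3.medium", volume_gb=5):
    """Keyword arguments for SageMaker's CreateNotebookInstance API."""
    return {
        "NotebookInstanceName": name,
        "InstanceType": instance_type,
        "RoleArn": role_arn,  # IAM role SageMaker assumes to reach S3 and other services
        "VolumeSizeInGB": volume_gb,
    }


def create_notebook(sagemaker_client, request):
    """Start creating the instance and return its ARN (creation is asynchronous)."""
    return sagemaker_client.create_notebook_instance(**request)["NotebookInstanceArn"]


# Usage (requires AWS credentials):
#   import boto3
#   sm = boto3.client("sagemaker")
#   create_notebook(sm, build_notebook_request("ml-sandbox", "arn:aws:iam::123456789012:role/MySageMakerRole"))
#   sm.get_waiter("notebook_instance_in_service").wait(NotebookInstanceName="ml-sandbox")
```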

Training And Deploying A Model

Once your notebook instance is ready, open it and start working on your model. Upload your dataset to an S3 bucket, and load a sample into your notebook to explore it. Then train the model using one of SageMaker’s built-in algorithms or your own training code; a SageMaker training job reads the training data directly from S3.
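Behind the console and SDK, a SageMaker training job is a single `CreateTrainingJob` request that points a training container at data in S3. The sketch below assumes a hypothetical container image URI, role ARN, and S3 locations; built-in algorithms usually also require a `HyperParameters` dictionary, which is left out here.

```python
# Sketch: start a SageMaker training job that reads its data from S3 (boto3).
# Assumptions: job name, image URI, role ARN, S3 URIs, and instance type are hypothetical.


def build_training_job_request(job_name, image_uri, role_arn, train_s3_uri,
                               output_s3_uri, instance_type="ml.m5.large"):
    """Keyword arguments for SageMaker's CreateTrainingJob API."""
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {"TrainingImage": image_uri, "TrainingInputMode": "File"},
        "RoleArn": role_arn,
        "InputDataConfig": [{
            "ChannelName": "train",  # visible in the container under /opt/ml/input/data/train
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": train_s3_uri,
                "S3DataDistributionType": "FullyReplicated",
            }},
            "ContentType": "text/csv",
        }],
        # The trained artifact lands at <S3OutputPath>/<job name>/output/model.tar.gz
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},
        "ResourceConfig": {"InstanceType": instance_type, "InstanceCount": 1, "VolumeSizeInGB": 10},
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},  # stop runaway jobs after 1 hour
    }


def start_training(sagemaker_client, request):
    """Submit the job and return its ARN; training runs asynchronously."""
    return sagemaker_client.create_training_job(**request)["TrainingJobArn"]


# Usage (requires AWS credentials):
#   sm = boto3.client("sagemaker")
#   start_training(sm, build_training_job_request("my-job", IMAGE_URI, ROLE_ARN, TRAIN_URI, OUTPUT_URI))
#   sm.get_waiter("training_job_completed_or_stopped").wait(TrainingJobName="my-job")
```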

After training, deploy the model. Create an endpoint in SageMaker; this endpoint serves your model, and you make predictions by sending data to it. SageMaker manages the underlying infrastructure, and you can configure automatic scaling so the endpoint adds or removes instances as traffic changes. This helps keep latency low and availability high.
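Deploying to an endpoint takes three API calls (model, endpoint configuration, endpoint), after which predictions go through the separate `sagemaker-runtime` client. This sketch assumes a hypothetical image URI, S3 model location, role ARN, and a model that accepts CSV input; it creates a single-instance endpoint and does not configure auto scaling.

```python
# Sketch: turn a trained model.tar.gz into a real-time SageMaker endpoint, then call it.
# Assumptions: names, image URI, S3 URI, role ARN, and CSV input format are hypothetical.


def deploy_endpoint(sm, name, image_uri, model_data_url, role_arn, instance_type="ml.m5.large"):
    """Create model -> endpoint config -> endpoint, then wait until it is InService."""
    sm.create_model(
        ModelName=name,
        PrimaryContainer={"Image": image_uri, "ModelDataUrl": model_data_url},
        ExecutionRoleArn=role_arn,
    )
    sm.create_endpoint_config(
        EndpointConfigName=name,
        ProductionVariants=[{
            "VariantName": "AllTraffic",
            "ModelName": name,
            "InitialInstanceCount": 1,  # 2+ instances spread across AZs improve availability
            "InstanceType": instance_type,
        }],
    )
    sm.create_endpoint(EndpointName=name, EndpointConfigName=name)
    # Endpoint creation is asynchronous and usually takes several minutes.
    sm.get_waiter("endpoint_in_service").wait(EndpointName=name)


def predict(runtime, endpoint_name, csv_row):
    """Send one CSV row to the endpoint and return the raw response text."""
    response = runtime.invoke_endpoint(
        EndpointName=endpoint_name, ContentType="text/csv", Body=csv_row
    )
    return response["Body"].read().decode("utf-8")


# Usage (requires AWS credentials):
#   import boto3
#   deploy_endpoint(boto3.client("sagemaker"), "my-model", IMAGE_URI, "s3://my-bucket/model.tar.gz", ROLE_ARN)
#   print(predict(boto3.client("sagemaker-runtime"), "my-model", "5.1,3.5,1.4,0.2"))
```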

Deploying Your Model With EC2

Deploying your machine learning model on AWS using EC2 can be a game-changer. It offers flexibility, scalability, and control. You can manage your resources and customize your environment. This section will guide you through the steps to deploy your model on an EC2 instance.

Setting Up An EC2 Instance

First, log in to the AWS Management Console and open the EC2 Dashboard. Click “Launch instance”. The current console collects the settings on a single page (older versions split them across several “Next” steps):

- Name and tags: give the instance a name so you can identify it.

- Application and OS Images: choose an Amazon Machine Image (AMI). For machine learning, an AWS Deep Learning AMI is a good choice because it ships with popular frameworks preinstalled.

- Instance type: select one that meets your model’s needs, such as a GPU instance for deep learning.

- Key pair: choose or create a key pair so you can connect over SSH.

- Network settings: create a security group that allows SSH only from your own IP address, plus the port your model will serve on.

- Configure storage: add enough storage for your data and model files.

Review the summary and click “Launch instance”.
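If you prefer to script the launch, these settings map onto EC2’s `RunInstances` API. This sketch assumes hypothetical values for the AMI ID, instance type, key pair, security group, and instance name, and it keeps the AMI’s default root volume rather than configuring storage.

```python
# Sketch: launch one EC2 instance with boto3 and wait until it is running.
# Assumptions: AMI ID, instance type, key pair, security group, and name are hypothetical;
# look up a current Deep Learning AMI ID for your Region first.


def build_run_instances_request(ami_id, instance_type, key_name, security_group_id, name):
    """Keyword arguments for EC2's RunInstances API (exactly one instance)."""
    return {
        "ImageId": ami_id,
        "InstanceType": instance_type,
        "KeyName": key_name,  # key pair used for SSH access
        "SecurityGroupIds": [security_group_id],
        "MinCount": 1,
        "MaxCount": 1,
        "TagSpecifications": [{
            "ResourceType": "instance",
            "Tags": [{"Key": "Name", "Value": name}],  # the name shown in the console
        }],
    }


def launch(ec2_client, request):
    """Launch the instance, block until it is running, and return its ID."""
    instance_id = ec2_client.run_instances(**request)["Instances"][0]["InstanceId"]
    ec2_client.get_waiter("instance_running").wait(InstanceIds=[instance_id])
    return instance_id


# Usage (requires AWS credentials):
#   import boto3
#   req = build_run_instances_request("ami-0123456789abcdef0", "g4dn.xlarge", "my-key", "sg-0123456789abcdef0", "ml-server")
#   launch(boto3.client("ec2"), req)
```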

Installing Required Software

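Once your instance is running, connect to it via SSH and install the software your model needs (a Deep Learning AMI already includes the major frameworks, so you may be able to skip most of this). On an Ubuntu-based AMI, first update the package index with `sudo apt-get update`, then install pip and venv with `sudo apt-get install -y python3-pip python3-venv`. Create and activate a virtual environment with `python3 -m venv ~/ml-env` and `source ~/ml-env/bin/activate`; recent Ubuntu releases block system-wide pip installs. Then install the libraries your model needs, such as TensorFlow, PyTorch, or scikit-learn, with pip, for example `pip install tensorflow`. Make sure all dependencies are installed. Finally, copy your model files to the instance with `scp` or another file transfer method. Your EC2 instance is now ready for deployment.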
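Once the software is installed, something has to listen for prediction requests. The sketch below is a minimal, standard-library-only HTTP server that accepts `POST /predict` with a JSON body like `{"features": [...]}`. The `load_model` function is a hypothetical placeholder that doubles its inputs; you would replace it with your real loading code. Port 8080 is an assumption and must be allowed by the instance’s security group. For production traffic, a framework such as Flask or FastAPI behind a production server is the more common choice.

```python
# Sketch: minimal HTTP inference server for an EC2 instance (standard library only).
# Assumptions: load_model is a hypothetical placeholder; port 8080 is arbitrary.
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def load_model():
    # Hypothetical placeholder "model": doubles each input value.
    return lambda features: [2 * x for x in features]


MODEL = load_model()  # loaded once at startup and reused for every request


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404, "Unknown path")
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            payload = json.loads(self.rfile.read(length))
            prediction = MODEL(payload["features"])
        except (ValueError, KeyError, TypeError):
            self.send_error(400, 'Expected JSON like {"features": [...]}')
            return
        body = json.dumps({"prediction": prediction}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(host="0.0.0.0", port=8080):
    # 0.0.0.0 listens on all interfaces so clients outside the instance can connect.
    return HTTPServer((host, port), PredictHandler)


# On the instance: make_server().serve_forever()
```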

Deploying Your Model With Lambda

Deploying machine learning models on AWS provides scalability and efficiency. Using AWS Lambda, you can deploy models without managing servers. This approach ensures quick responses and cost-effectiveness.

Creating A Lambda Function

First, navigate to the AWS Management Console. Select Lambda from the services menu. Click the “Create function” button. Choose the “Author from scratch” option.

Enter a name for your function. Select Python as the runtime. Click the “Create function” button. Your Lambda function is now ready for configuration.

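Next, add your model files and dependencies. You can upload a .zip deployment package through the Lambda console or the AWS CLI; a .zip package is limited to 250 MB unzipped, so larger frameworks usually need a container image (up to 10 GB) instead. Load the model outside the handler function so that warm invocations reuse it instead of reloading it on every request. Test your function to confirm it works.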
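A minimal handler along these lines might look like the sketch below. It assumes the API Gateway integration uses Lambda proxy integration, so the request body arrives as a JSON string in `event["body"]` (not base64-encoded), and `load_model` is a hypothetical placeholder that sums its inputs. Loading the model at module level means it is loaded once per execution environment and reused by warm invocations.

```python
# Sketch: AWS Lambda handler for model inference behind API Gateway (proxy integration).
# Assumptions: load_model is a hypothetical placeholder for real loading code
# (e.g. a file in the deployment package, or one downloaded from S3 to /tmp).
import json


def load_model():
    # Hypothetical placeholder "model": sums the input features.
    return lambda features: sum(features)


# Runs once per execution environment (at cold start), not once per request.
MODEL = load_model()


def lambda_handler(event, context):
    try:
        payload = json.loads(event.get("body") or "{}")
        prediction = MODEL(payload["features"])
    except (ValueError, KeyError, TypeError):
        return _response(400, {"error": 'Expected a JSON body like {"features": [...]}'})
    return _response(200, {"prediction": prediction})


def _response(status_code, body):
    # Proxy integrations expect statusCode, headers, and a string body.
    return {
        "statusCode": status_code,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }
```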

Integrating With API Gateway

API Gateway allows you to expose your Lambda function as an API. In the AWS Management Console, navigate to API Gateway. Click “Create API” and choose “REST API”.

Enter a name for your API and click “Create API”. Select “Actions” and choose “Create Resource”. Enter a name for the resource and click “Create Resource”.

Next, create a method for the resource. Select “Actions” and choose “Create Method”. Choose “POST” and click the check mark. Select “Lambda Function” as the integration type and enable “Use Lambda Proxy integration” so the full request, including its body, is passed to your function. Enter the name of your Lambda function and click “Save”.

Finally, deploy the API. Select “Actions” and choose “Deploy API”. Create a new stage and click “Deploy”. Your API endpoint is now live.
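To check that the endpoint works, you can call it from any HTTP client. This sketch uses only Python’s standard library and assumes hypothetical values for the API ID, Region, stage, and resource name; copy the real invoke URL from the API Gateway console after deploying. It also assumes the function behind the API expects a JSON body with a `features` list.

```python
# Sketch: call the deployed API Gateway endpoint (standard library only).
# Assumptions: API_ID, REGION, STAGE, and RESOURCE are hypothetical placeholders.
import json
import urllib.request

API_ID = "abc123"     # hypothetical; shown in the API Gateway console
REGION = "us-east-1"  # hypothetical
STAGE = "prod"        # the stage you created when deploying
RESOURCE = "predict"  # the resource you created


def invoke_url(api_id, region, stage, resource):
    # Default invoke URL format for a REST API stage.
    return f"https://{api_id}.execute-api.{region}.amazonaws.com/{stage}/{resource}"


def build_request(url, features):
    """Build a POST request with a JSON body like {"features": [...]}."""
    body = json.dumps({"features": features}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, method="POST", headers={"Content-Type": "application/json"}
    )


def predict(features, timeout=10):
    request = build_request(invoke_url(API_ID, REGION, STAGE, RESOURCE), features)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())


# Usage: predict([1.0, 2.0, 3.0]) returns the decoded JSON response.
```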

Monitoring And Managing Your Deployment

Hosting machine learning models on AWS can be a powerful solution. It offers scalability, flexibility, and reliability. But it’s crucial to monitor and manage your deployment effectively so your models perform well and stay operational. In this section, we will explore how to use AWS tools for monitoring and managing your deployment.

Using CloudWatch

Amazon CloudWatch is an essential tool for monitoring your machine learning models. It helps you track metrics and log files. You can set alarms and respond to changes in your deployment. CloudWatch provides near real-time data, which is vital for maintaining performance.

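To start, set up CloudWatch to collect metrics from your deployment, such as CPU usage, memory, and network traffic. EC2 reports CPU and network metrics by default, while memory metrics require the CloudWatch agent; SageMaker endpoints also publish metrics such as invocation counts and model latency. You can create dashboards to visualize these metrics, which makes it easier to spot trends and anomalies. Use alarms to notify you of any issues. For example, if your model’s latency increases, you will get an alert.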
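As an example of the latency alert described above, this sketch builds a CloudWatch `PutMetricAlarm` request on a SageMaker endpoint’s `ModelLatency` metric, which is reported in microseconds. The endpoint name, the SNS topic ARN that receives the notification, the 500 ms threshold, and the five one-minute evaluation periods are hypothetical choices.

```python
# Sketch: CloudWatch alarm on SageMaker endpoint latency (boto3).
# Assumptions: endpoint name, SNS topic ARN, threshold, and periods are hypothetical.


def build_latency_alarm(endpoint_name, sns_topic_arn, threshold_ms=500, variant_name="AllTraffic"):
    """Keyword arguments for CloudWatch's PutMetricAlarm API."""
    return {
        "AlarmName": f"{endpoint_name}-high-latency",
        "Namespace": "AWS/SageMaker",
        "MetricName": "ModelLatency",
        "Dimensions": [
            {"Name": "EndpointName", "Value": endpoint_name},
            {"Name": "VariantName", "Value": variant_name},
        ],
        "Statistic": "Average",
        "Period": 60,            # one-minute data points
        "EvaluationPeriods": 5,  # alarm after five consecutive breaching minutes
        "Threshold": float(threshold_ms * 1000),  # ModelLatency is in microseconds
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],  # e.g. an SNS topic that emails the team
    }


def create_alarm(cloudwatch_client, alarm):
    cloudwatch_client.put_metric_alarm(**alarm)


# Usage (requires AWS credentials):
#   import boto3
#   create_alarm(boto3.client("cloudwatch"), build_latency_alarm("my-model", TOPIC_ARN))
```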

Scaling Your Deployment

Scaling is crucial for handling varying workloads. AWS offers several options to scale your deployment. You can use Auto Scaling to adjust resources automatically. This ensures your deployment can handle traffic spikes and reduces costs during low traffic periods.

To set up Auto Scaling, define scaling policies. These policies determine when to add or remove resources. For example, you can scale out when CPU usage exceeds 70%. AWS will then add more instances to handle the load. Similarly, you can scale in when CPU usage drops below 30%.
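The 70%/30% rule above describes step scaling, which needs two CloudWatch alarms. A simpler alternative, swapped in here, is target tracking: you name one target value and Auto Scaling creates and manages the alarms that keep the metric near it. This sketch assumes an existing EC2 Auto Scaling group (the name is hypothetical) and a 50% CPU target; SageMaker endpoints use Application Auto Scaling instead of EC2 Auto Scaling.

```python
# Sketch: EC2 Auto Scaling target tracking on average CPU (boto3).
# Assumptions: the Auto Scaling group exists; its name and the 50% target are hypothetical.


def build_cpu_target_policy(asg_name, target_cpu_percent=50.0):
    """Keyword arguments for the Auto Scaling PutScalingPolicy API."""
    return {
        "AutoScalingGroupName": asg_name,
        "PolicyName": f"{asg_name}-cpu-target-tracking",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingConfiguration": {
            # Average CPU across all instances in the group.
            "PredefinedMetricSpecification": {"PredefinedMetricType": "ASGAverageCPUUtilization"},
            "TargetValue": target_cpu_percent,
        },
    }


def apply_policy(autoscaling_client, request):
    """Create or update the policy and return its ARN."""
    return autoscaling_client.put_scaling_policy(**request)["PolicyARN"]


# Usage (requires AWS credentials):
#   import boto3
#   apply_policy(boto3.client("autoscaling"), build_cpu_target_policy("ml-inference-asg"))
```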

Using Elastic Load Balancing (ELB) improves your deployment’s availability. ELB distributes incoming traffic across multiple instances. This helps to prevent any single instance from becoming a bottleneck.

Here is a simple table to summarize the key points:

| Feature | Purpose |
|---|---|
| CloudWatch | Monitor metrics and logs, set alarms |
| Auto Scaling | Adjust resources automatically based on demand |
| Elastic Load Balancing | Distribute traffic to improve availability |

Key Takeaways:

- Use CloudWatch to monitor and visualize metrics.

- Set up alarms for quick issue detection.

- Implement Auto Scaling to manage resource usage.

- Use ELB to distribute traffic and improve availability.

Security Best Practices

Hosting machine learning models on AWS can be powerful and efficient. Yet, ensuring the security of your models and data is crucial. Here are some best practices to keep your AWS environment secure.

IAM Roles And Permissions

Using IAM roles and permissions is vital. They control who can access your AWS resources and what they can do. Follow the principle of least privilege: grant each role only the permissions it needs.

Here is a simple example of an IAM policy that allows read-only access to S3:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:GetObject",
        "s3:ListBucket"
      ],
      "Resource": [
        "arn:aws:s3:::example-bucket",
        "arn:aws:s3:::example-bucket/*"
      ]
    }
  ]
}
```

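This policy grants read-only access to a single bucket: `s3:ListBucket` applies to the bucket ARN, and `s3:GetObject` applies to the objects under `example-bucket/*`. Because it grants no write or delete actions, it prevents unintended changes or deletions.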
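The same read-only access can be created and attached from code. This sketch tightens the policy slightly by pairing each action with the resource it applies to (`s3:ListBucket` on the bucket, `s3:GetObject` on its objects). The role name, policy name, and bucket name are hypothetical, and the boto3 IAM client is passed in.

```python
# Sketch: create a read-only S3 policy for one bucket and attach it to a role (boto3).
# Assumptions: role, policy, and bucket names are hypothetical; the role already exists.
import json


def read_only_bucket_policy(bucket):
    """Least-privilege policy: list the bucket, read its objects, nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": ["s3:ListBucket"],
             "Resource": [f"arn:aws:s3:::{bucket}"]},
            {"Effect": "Allow", "Action": ["s3:GetObject"],
             "Resource": [f"arn:aws:s3:::{bucket}/*"]},
        ],
    }


def create_and_attach_policy(iam_client, role_name, policy_name, bucket):
    """Create a managed policy, attach it to role_name, and return the policy ARN."""
    response = iam_client.create_policy(
        PolicyName=policy_name,
        PolicyDocument=json.dumps(read_only_bucket_policy(bucket)),  # API expects a JSON string
    )
    policy_arn = response["Policy"]["Arn"]
    iam_client.attach_role_policy(RoleName=role_name, PolicyArn=policy_arn)
    return policy_arn


# Usage (requires AWS credentials):
#   import boto3
#   create_and_attach_policy(boto3.client("iam"), "MySageMakerRole", "ModelBucketReadOnly", "example-bucket")
```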

Data Encryption

Data encryption is another key practice. Encrypt data at rest and in transit. AWS offers several encryption tools.

For data at rest, use AWS Key Management Service (KMS). Here is a quick guide:

- Create a KMS key in the AWS Management Console.

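- Use the KMS key to encrypt data in S3 (for example, as a bucket’s default encryption) or RDS (by enabling storage encryption when you create the database).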
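The two steps above can be scripted with boto3, here applying the key as an S3 bucket’s default encryption (SSE-KMS). The key description and bucket name are hypothetical; enabling S3 Bucket Keys is an optional choice that reduces the number of requests S3 makes to KMS.

```python
# Sketch: create a KMS key and make it an S3 bucket's default encryption (SSE-KMS).
# Assumptions: key description and bucket name are hypothetical; clients are boto3
# "kms" and "s3" clients passed in by the caller.


def create_kms_key(kms_client, description):
    """Create a symmetric KMS key and return its key ID."""
    return kms_client.create_key(Description=description)["KeyMetadata"]["KeyId"]


def default_encryption_config(key_id):
    """ServerSideEncryptionConfiguration that encrypts new objects with key_id."""
    return {
        "Rules": [{
            "ApplyServerSideEncryptionByDefault": {
                "SSEAlgorithm": "aws:kms",
                "KMSMasterKeyID": key_id,
            },
            "BucketKeyEnabled": True,  # S3 Bucket Keys cut the number of KMS requests
        }]
    }


def enable_bucket_encryption(s3_client, bucket, key_id):
    s3_client.put_bucket_encryption(
        Bucket=bucket,
        ServerSideEncryptionConfiguration=default_encryption_config(key_id),
    )


# Usage (requires AWS credentials):
#   import boto3
#   key_id = create_kms_key(boto3.client("kms"), "ML training data key")
#   enable_bucket_encryption(boto3.client("s3"), "my-ml-bucket", key_id)
```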

For data in transit, use Transport Layer Security (TLS), the successor to the now-deprecated Secure Sockets Layer (SSL). Enable HTTPS on your web applications. Here is how to enable HTTPS on an AWS Elastic Load Balancer:

- Go to the Load Balancers section in the AWS Management Console.

- Select your load balancer and edit the listeners.

- Add an HTTPS listener and select a TLS certificate, for example one issued by AWS Certificate Manager (ACM).

These steps ensure your data remains secure during transmission.
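For an Application Load Balancer, the HTTPS listener from these steps corresponds to a single `CreateListener` call. This sketch assumes hypothetical ARNs for the load balancer, ACM certificate, and target group, and uses `ELBSecurityPolicy-TLS13-1-2-2021-06`, a predefined policy that allows TLS 1.3 and 1.2; check the current list of predefined policies before choosing one.

```python
# Sketch: add an HTTPS listener to an Application Load Balancer (boto3 "elbv2").
# Assumptions: all ARNs are hypothetical; the certificate is assumed to come from ACM.


def build_https_listener(load_balancer_arn, certificate_arn, target_group_arn):
    """Keyword arguments for the ELBv2 CreateListener API."""
    return {
        "LoadBalancerArn": load_balancer_arn,
        "Protocol": "HTTPS",
        "Port": 443,
        "SslPolicy": "ELBSecurityPolicy-TLS13-1-2-2021-06",  # TLS 1.3 and 1.2 only
        "Certificates": [{"CertificateArn": certificate_arn}],
        # Forward decrypted requests to the instances serving the model.
        "DefaultActions": [{"Type": "forward", "TargetGroupArn": target_group_arn}],
    }


def create_https_listener(elbv2_client, request):
    """Create the listener and return its ARN."""
    return elbv2_client.create_listener(**request)["Listeners"][0]["ListenerArn"]


# Usage (requires AWS credentials):
#   import boto3
#   create_https_listener(boto3.client("elbv2"), build_https_listener(LB_ARN, CERT_ARN, TG_ARN))
```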

By following these security best practices, you can protect your machine learning models and data on AWS.

Cost Management

Hosting machine learning models on AWS can be cost-effective. But managing costs is crucial for long-term sustainability. Effective cost management ensures you are not overspending, and helps in utilizing resources efficiently. Let’s explore how to manage costs effectively.

Estimating Costs

Before deploying models, it’s important to estimate the costs involved. AWS provides several tools to help with this. Use the AWS Pricing Calculator to forecast expenses. This tool helps you understand the costs associated with different AWS services. For instance, the costs for:

- Compute resources (EC2 instances)

- Storage (S3, EBS)

- Data transfer

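Here’s a simple table of example cost components. The figures are illustrative only; your actual costs depend on Region, instance types, and usage:

| Service | Example cost per month (USD) |
|---|---|
| EC2 Instances | $100 |
| S3 Storage | $50 |
| Data Transfer | $20 |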

Analyze these costs to make informed decisions. This will help in avoiding unexpected bills.

Optimizing Expenditure

After estimating costs, the next step is to optimize expenditure. Here are a few tips:

- Right-size resources: Choose the right instance types and sizes. Avoid over-provisioning.

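- Use Spot Instances: These instances use spare EC2 capacity at a large discount compared with On-Demand instances, but AWS can reclaim them with a two-minute warning. They suit fault-tolerant or non-critical workloads, such as training jobs that save checkpoints.

- Leverage Reserved Instances or Savings Plans: Commit to a one- or three-year term to get discounts.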

- Monitor usage: Regularly check usage patterns. Identify and eliminate underused resources.

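Consider this snippet, which uses the Cost Explorer API (the boto3 `ce` client) to report one month’s costs per service. Cost Explorer must be enabled for your account, and each API request is billed (currently $0.01 per request):

```python
import boto3


def get_costs_by_service(start, end):
    """Return {service: USD cost} for [start, end); End is exclusive in Cost Explorer."""
    client = boto3.client("ce")  # Cost Explorer
    response = client.get_cost_and_usage(
        TimePeriod={"Start": start, "End": end},
        Granularity="MONTHLY",
        Metrics=["BlendedCost"],
        GroupBy=[{"Type": "DIMENSION", "Key": "SERVICE"}],
    )
    costs = {}
    for period in response["ResultsByTime"]:
        for group in period["Groups"]:
            service = group["Keys"][0]
            amount = float(group["Metrics"]["BlendedCost"]["Amount"])
            costs[service] = costs.get(service, 0.0) + amount
    return costs


# January 2023: End is exclusive, so use the first day of the next month.
for service, amount in sorted(get_costs_by_service("2023-01-01", "2023-02-01").items()):
    print(f"{service}: ${amount:.2f}")
```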

Such automation helps in tracking and managing costs effectively. Regular monitoring and adjustments can lead to significant savings.

Conclusion And Next Steps

Deploying machine learning models on AWS offers scalability and efficiency. Start small and gradually optimize your setup. Regularly monitor performance and costs to ensure optimal results.

Hosting machine learning models on AWS can be a powerful solution. It offers scalability, flexibility, and various tools to support your models. As you move forward, it’s important to recap key points and explore further learning resources.

Recap Of Key Points

First, AWS provides a range of services like SageMaker, EC2, and Lambda. These services help in building, training, and deploying machine learning models. SageMaker offers an integrated environment for model development. EC2 allows for flexible computing resources. Lambda supports serverless deployment.

Second, security and cost management are crucial. AWS provides tools to ensure data security and cost efficiency. IAM roles help in managing permissions. Cost Explorer helps in tracking expenses.

Third, monitoring and maintenance are essential. Use Amazon CloudWatch for real-time monitoring. Regularly update and retrain models to keep them effective.

Further Learning Resources

To deepen your understanding, explore the following resources:

- AWS Machine Learning Blog: Offers tutorials and case studies.

- AWS Training and Certification: Provides courses and certifications.

- GitHub repositories: Access sample projects and code snippets, such as the aws/amazon-sagemaker-examples repository.

- Online communities: Join forums like Stack Overflow and AWS re:Post, which replaced the AWS Developer Forums.

These resources will help you stay updated and improve your skills. Keep learning and experimenting with AWS tools. This will enhance your ability to host machine learning models effectively.



Frequently Asked Questions

What Is AWS For Machine Learning?

AWS provides a robust platform for deploying and managing machine learning models. It offers services like SageMaker for building, training, and deploying models efficiently.

How To Deploy A Model On AWS?

A common route is Amazon SageMaker: you train the model, deploy it to an endpoint, and then send requests to that endpoint for real-time predictions. EC2 and Lambda are alternatives when you need more control or a serverless setup.

Why Use Aws For Machine Learning?

AWS offers scalability, flexibility, and a wide range of tools. These features make it easier to build, train, and deploy machine learning models.

What Are The Costs For Hosting ML Models On AWS?

Costs vary based on usage, storage, and compute power. AWS provides a pricing calculator to estimate expenses for hosting your models.

Conclusion

Hosting machine learning models on AWS offers many benefits. It’s scalable and reliable. You can easily manage resources. Cost efficiency is another plus. AWS provides many tools for developers. Security features help protect your data. Paid support plans add technical support if you need it.

Overall, AWS simplifies machine learning deployment. It’s a smart choice for businesses. Start your journey with AWS today.